Revolutionizing Remote Sensing with AI: Automating Workflows with ChatGPT

Remote Sensing ChatGPT

Sohom Pal

AI-ML Engineer (Z-III), Founder-in-Residence, Zemuria Inc.

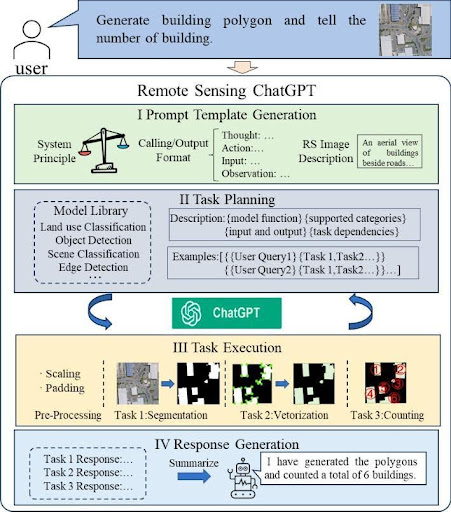

1. Introduction to Remote Sensing ChatGPT

1.1 Rethinking Remote Sensing with Language Models

For the longest time, remote sensing (whether for urban monitoring, agriculture, or disaster management) required domain-specific expertise and carefully crafted tools. However, Remote Sensing ChatGPT brings something exciting to the table: it combines LLMs, like ChatGPT, with remote sensing models to automate complex workflows.

The idea is straightforward: instead of manually deciding what steps to take, Remote Sensing ChatGPT can interpret user queries, plan tasks, and execute them autonomously. This approach opens up new possibilities but also raises some important questions. How reliable is it? Can it handle edge cases? And what happens if it encounters tasks beyond its capacity? Let's dive deeper into what makes this system both promising and challenging.

1.2 Why Automating Task Planning Is a Game-Changer

One of the toughest parts of working with remote sensing data isn’t just running models; it’s figuring out which models to use and in what order. For example, let’s say you want to know how many airplanes are parked on a runway from satellite imagery. This requires several steps: segmenting the runway, detecting airplanes, and then counting them.

Right now, that kind of workflow needs human planning and expertise. Remote Sensing ChatGPT simplifies the entire process. It understands queries in natural language and figures out which models to apply automatically.

This is a big deal because it can:

- Reduce the reliance on specialists.

- Speed up the analysis process, especially for time-sensitive scenarios like disaster response.

- Make remote sensing accessible to more users, from researchers in other fields to small organizations without large technical teams.
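To make the airplane example concrete, here is a minimal sketch of the workflow a person would otherwise have to plan and chain by hand. The functions `segment_runway` and `detect_airplanes` are hypothetical stubs that return hard-coded boxes; they stand in for real models and are not part of Remote Sensing ChatGPT.

```python
# Each box is (x_min, y_min, x_max, y_max) in pixel coordinates.
Box = tuple[int, int, int, int]


def segment_runway(image_id: str) -> Box:
    """Stub for a runway segmentation model; returns one bounding region."""
    return (0, 40, 200, 60)


def detect_airplanes(image_id: str) -> list[Box]:
    """Stub for an object detector; returns airplane boxes for the whole scene."""
    return [(10, 42, 20, 52), (90, 45, 100, 55), (150, 120, 160, 130)]


def center_inside(box: Box, region: Box) -> bool:
    """True if the centre of `box` falls inside `region`."""
    cx = (box[0] + box[2]) / 2
    cy = (box[1] + box[3]) / 2
    return region[0] <= cx <= region[2] and region[1] <= cy <= region[3]


def count_airplanes_on_runway(image_id: str) -> int:
    # Step 1: find the runway. Step 2: find airplanes. Step 3: count the overlap.
    runway = segment_runway(image_id)
    planes = detect_airplanes(image_id)
    return sum(center_inside(plane, runway) for plane in planes)


print(count_airplanes_on_runway("scene_001"))  # prints 2
```

The point is the ordering: counting only makes sense once both the runway region and the airplane boxes exist, and that dependency is what the planner has to work out from the query.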

2. Challenges in Remote Sensing Automation

2.1 The Challenge of Visual and Task Diversity

One of the most exciting aspects of this project is the use of visual models like BLIP to give ChatGPT the ability to understand remote sensing images. However, remote sensing data is very different from typical photographs: it can include infrared and other multispectral bands, derived products such as vegetation indices, and elevation models.

The tricky part? Many remote sensing tasks require highly specialized models. You can’t just slap an off-the-shelf image classifier onto a multispectral image and expect it to work.

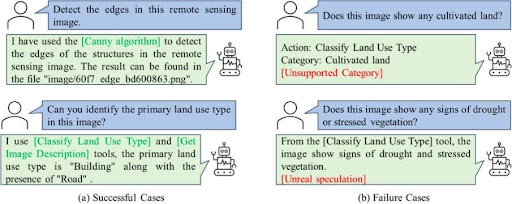

Remote Sensing ChatGPT does a good job by supporting models for scene classification, object detection, and edge detection. But what happens when a user asks something it doesn’t support?

The paper shows examples where the system misinterpreted queries or tried to “imagine” answers when it lacked the right tools. For example, when asked to classify cultivated land, it failed because the pre-trained models didn’t support that category. This makes me wonder:

- How can we build better fallback mechanisms?

- Should the system ask for more information or notify users about its limitations rather than guessing?

- Would it make sense to integrate open-vocabulary models or allow users to extend the system with custom models?

2.2 Failure Cases and Room for Improvement

No system is perfect, and Remote Sensing ChatGPT is no exception. While it performs well in many scenarios, some cases reveal critical limitations. For example:

- Unsupported Categories: It couldn’t classify cultivated land because the underlying land-use model wasn’t trained on that category.

- Over-Confidence: In cases where it lacked the right data, it guessed answers rather than requesting more input or alerting users.

What’s the solution here? I think adding better error-handling mechanisms could go a long way. For instance:

- If the system encounters a task it can’t handle, it could suggest alternatives or ask clarifying questions.

- It could give users transparency about which models are being used and their limitations, instead of pretending to know everything.

This would not only improve the user experience but also increase trust in the system’s outputs.

3. Solutions to Current Challenges

3.1 How Can We Build Better Fallback Mechanisms?

To build better fallback mechanisms for Remote Sensing ChatGPT, we need to address two key issues: unsupported tasks and over-confidence in answers. The current system has shown that when it encounters queries it doesn’t fully understand or lacks the appropriate tools for, it tends to either guess the answer or return incomplete results. Here’s a breakdown of some potential solutions to these challenges:

3.1.1 Introduce a Query Clarification Loop

Instead of guessing, the system could initiate a feedback loop when it detects that the user query is outside its current capability. For example:

- If a model cannot identify a particular land-use type (like “cultivated land”), it could ask the user for more specific input or clarification.

- It could also suggest alternative, supported categories: “The model does not recognize ‘cultivated land.’ Would you like me to check for agricultural areas instead?”

This approach would prevent incorrect outputs and engage the user in task planning, leading to more reliable results.
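A clarification step like this could be sketched as follows. The category list and the `RELATED` hint table are assumptions made up for illustration; the article does not list the land-use model's actual labels.

```python
from typing import Optional

# Hypothetical label set for a land-use model.
SUPPORTED = ("agricultural area", "urban zone", "forest", "water body")

# Hypothetical, hand-maintained hints from unsupported terms to supported ones.
RELATED = {"cultivated land": "agricultural area"}


def clarification_for(requested: str) -> Optional[str]:
    """Return a question for the user, or None if the category is supported."""
    key = requested.strip().lower()
    if key in SUPPORTED:
        return None  # the model can handle this directly
    related = RELATED.get(key)
    if related is not None:
        return (f"The model does not recognize '{key}'. "
                f"Would you like me to check for {related} instead?")
    options = ", ".join(SUPPORTED)
    return (f"The model does not recognize '{key}'. "
            f"Supported categories are: {options}. Which one should I use?")


print(clarification_for("Cultivated Land"))
print(clarification_for("vineyard"))
```

The function never produces a prediction for an unsupported label; it either passes the request through or returns a question, which is the behaviour the loop is meant to enforce.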

3.1.2 Transparent Model and Capability Disclosure

A significant issue arises when the system tries to perform a task it can’t handle. The solution here is transparency:

- Remote Sensing ChatGPT could notify users upfront about the models being used and their known limitations.

- For example, when calling a land-use model, the system could mention: “This model classifies agricultural and urban areas but may not detect cultivated land.”

This way, users have a better understanding of what to expect, and trust in the system's outputs increases.
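One way to make such disclosures routine is to store each tool's limitations next to the tool itself. The `ToolCard` structure below is a hypothetical sketch, not something the paper describes, and the limitation text is taken from the example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolCard:
    """Hypothetical description of one model the system can call."""
    name: str
    task: str
    limitations: tuple[str, ...] = ()


def disclosure(card: ToolCard) -> str:
    """Build the message shown to the user before the tool runs."""
    message = f"I'll use {card.name} for {card.task}."
    if card.limitations:
        message += " Known limitations: " + "; ".join(card.limitations) + "."
    return message


land_use = ToolCard(
    name="the land-use model",
    task="land-use classification",
    limitations=("may not detect cultivated land",),
)
print(disclosure(land_use))
```

Keeping the limitation text in data rather than in the prompt means the same wording can be shown to the user and passed to the planner.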

3.1.3 Graceful Error Handling and Suggesting Alternatives

The fallback mechanism could offer alternatives when a query fails.

- If object detection tools don’t support a specific object, the system could suggest switching to a more general model or a related task: “I couldn’t find ‘aircraft hangars,’ but I can count airplanes if that helps.”

This strategy would reduce frustration and ensure the user still gains valuable insights, even if the original task is not fully achievable.

3.1.4 Open-Ended Query Resolution

When all else fails, the system should avoid imaginative answers (as reported in some cases) and instead return an open-ended resolution:

- “I’m unable to detect the object you requested with the current model. Would you like me to try another approach or refine your query?”

This keeps the interaction moving forward without misleading the user with fabricated information.

3.1.5 Integrate External Model Suggestions or Extensions

Remote Sensing ChatGPT could allow user-driven model customization or integration of external APIs. If a query falls outside the scope of available models, the system could suggest:

- “This model doesn’t cover your task, but you could upload a specialized model, or connect to external tools for better results.”

This would provide flexibility, especially for niche tasks, and reduce limitations on what the system can accomplish.

3.2 Should the System Ask for More Information or Notify Users About Its Limitations Rather Than Guessing?

Yes, absolutely. Asking for more information or notifying users about limitations is a much better strategy than guessing. Here’s why, and how it can be implemented effectively in Remote Sensing ChatGPT.

3.2.1 Why Asking or Notifying Is Better Than Guessing

- Avoiding Misinformation

When the system guesses without enough information or proper tools, it risks providing incorrect results, which can erode user trust. Especially in critical applications like disaster response or urban monitoring, a wrong output can have serious real-world consequences.

- Building Transparency and Trust

By informing users of what the system can and can’t do, the interaction becomes more transparent. Users are more likely to trust a system that admits when it doesn’t have the right tools rather than one that makes things up.

- Engaging Users in Task Refinement

When the system asks for clarification or additional input, it invites users into the process of refining their queries. This not only leads to more accurate results but also improves user experience by helping users understand what’s possible with the available tools.

3.2.2 How the System Can Ask or Notify Effectively

- Request Clarification When Queries Are Ambiguous

If a query can’t be answered with available models (e.g., asking to classify “cultivated land” when the system lacks that category), the system should ask: “I’m unable to identify ‘cultivated land.’ Could you provide more detail or choose from available categories like ‘agricultural area’ or ‘urban zone’?”

- Notify Users of Model Capabilities

Before running a task, the system could list the models being used and their limitations. For example: “I’ll use the YOLOv5 model for object detection. Please note that this model may not detect smaller objects like vehicles accurately.”

This sets clear expectations upfront, preventing confusion or disappointment if the results don’t match what the user imagined.

- Provide Suggestions or Alternatives When a Task Fails

If a task isn’t possible, instead of guessing, the system could suggest other actions: “I couldn’t detect aircraft hangars, but I can count the airplanes. Would that be helpful?”

This turns potential failures into opportunities for alternative insights.

- Implement a “Limit Reached” Response

In cases where the system runs out of tools or information, it should respond honestly: “I don’t have the data or models to fully answer your question. Would you like to try a different approach or refine your query?”

This ensures the interaction ends gracefully and keeps the user engaged in the resolution process, rather than leaving them with a confusing or misleading response.

4. Advanced Approaches and Technologies

4.1 Picking the Right Models: gpt-3.5-turbo Outperforms gpt-4

The team behind Remote Sensing ChatGPT tested different ChatGPT backbones, including gpt-3.5-turbo and gpt-4. Surprisingly, gpt-3.5-turbo performed better, with a 94.9% task-planning success rate, while gpt-4 didn’t fare as well. This highlights an interesting trade-off: the latest, most advanced models aren’t always the best for every application.

This makes sense if you think about it. gpt-3.5-turbo might have fewer parameters, but it may be more consistent at following instructions, which is critical for task planning.

There’s a lesson here: sometimes, optimization and focus matter more than raw power.

This raises a bigger question: should we always chase the newest models, or should we focus more on fine-tuning and optimizing what works best for the task at hand?
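For readers who want to run this kind of backbone comparison themselves, a task-planning success rate can be computed roughly as below. This sketch assumes a plan counts as successful only when its tool sequence exactly matches a reference sequence; the paper's precise criterion may differ, and the tool names and plans here are invented.

```python
def planning_success_rate(planned: list[list[str]],
                          reference: list[list[str]]) -> float:
    """Fraction of queries whose planned tool sequence equals the reference."""
    if not reference:
        raise ValueError("need at least one reference plan")
    if len(planned) != len(reference):
        raise ValueError("planned and reference must have the same length")
    hits = sum(p == r for p, r in zip(planned, reference))
    return hits / len(reference)


# Invented evaluation set: one reference plan per query.
reference = [
    ["segment_runway", "detect_airplanes", "count"],
    ["classify_scene"],
    ["detect_edges"],
    ["detect_ships", "count"],
]
# Invented output of one backbone; the third plan picks the wrong tool.
planned = [
    ["segment_runway", "detect_airplanes", "count"],
    ["classify_scene"],
    ["classify_scene"],
    ["detect_ships", "count"],
]

rate = planning_success_rate(planned, reference)
print(f"{rate:.1%}")  # prints 75.0%
```

Running the same query set through each backbone and comparing the two rates gives a like-for-like answer to the question of which model to use, independent of which one is newer.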

4.2 Would It Make Sense to Integrate Open-Vocabulary Models or Allow Users to Extend the System with Custom Models?

Yes, absolutely. Integrating open-vocabulary models and allowing users to extend the system with custom models would greatly enhance the flexibility, adaptability, and usability of Remote Sensing ChatGPT. Here’s why it makes sense and how this could be implemented.

4.2.1 Why Open-Vocabulary Models Would Be a Game-Changer

Open-vocabulary models are designed to recognize and classify objects, scenes, or entities without being limited to predefined categories. In the context of remote sensing, this could solve one of the biggest challenges Remote Sensing ChatGPT faces: handling niche or unseen queries.

- Handling Diverse Queries

  - Remote sensing covers a wide range of use cases, from deforestation monitoring to infrastructure assessment. Pretrained models with limited categories can’t keep up with the variety.

  - An open-vocabulary model could, for example, detect uncommon land-use types or specific objects without requiring new model training each time.

- Reducing Failure Cases

  - Open-vocabulary models can provide reasonable guesses for previously unseen objects by generalizing based on similarities.

  - This could prevent situations where Remote Sensing ChatGPT struggles with categories like “cultivated land,” which weren’t included in the training data.

- Improving User Experience

  - Users won’t have to restrict their queries to known categories or worry about model limitations. They could ask more naturally, and the system would respond with more relevant insights, even for unexpected tasks.

4.3 Why Allowing Custom Models Is Crucial for Scalability

Remote sensing applications vary greatly across industries and use cases: agriculture, urban planning, disaster management, etc. It’s unrealistic to expect a single system to come preloaded with models for every use case. Allowing users to upload and integrate custom models offers several advantages:

- Domain-Specific Expertise

  - Different industries have specialized needs. A forestry researcher, for instance, might want to upload a custom model to detect specific tree species, which the standard model wouldn’t cover.

  - By giving users the ability to plug in their models, the system becomes more useful across a broader range of fields.

- Continuous System Growth

  - As users contribute their own models, the system could evolve into a community-driven platform where others can benefit from shared expertise.

  - This flexibility ensures the system doesn’t stagnate and keeps growing without the need for constant developer intervention.

- More Robust Error Handling

  - When a query isn’t solvable by the existing models, the system could suggest: “This task isn’t supported with current models. You can upload a custom model to handle this query.”

  - This keeps the user engaged and offers a pathway forward, rather than leaving them stuck with unsatisfactory answers.
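A plug-in mechanism of this kind needs some agreed contract for what a custom model looks like. The sketch below is one possible shape, assuming a model exposes a `name`, the `categories` it covers, and a `predict` method; `CustomTool` and `ToolRegistry` are hypothetical names, not part of Remote Sensing ChatGPT.

```python
from typing import Any, Protocol, runtime_checkable


@runtime_checkable
class CustomTool(Protocol):
    """Hypothetical contract a user-supplied model would have to satisfy."""
    name: str
    categories: tuple[str, ...]

    def predict(self, image_path: str) -> dict[str, Any]: ...


class ToolRegistry:
    def __init__(self) -> None:
        self._tools: dict[str, Any] = {}

    def register(self, tool: object) -> None:
        # isinstance() on a runtime_checkable Protocol only checks that the
        # members exist, not their types or signatures.
        if not isinstance(tool, CustomTool):
            raise TypeError("tool must define name, categories and predict()")
        self._tools[tool.name] = tool

    def tools_for(self, category: str) -> list[str]:
        return sorted(n for n, t in self._tools.items() if category in t.categories)

    def explain(self, category: str) -> str:
        names = self.tools_for(category)
        if names:
            return f"'{category}' is handled by: {', '.join(names)}."
        return ("This task isn't supported with current models. "
                "You can upload a custom model to handle this query.")


class TreeSpeciesDetector:
    """Example user model; the prediction is a stub."""
    name = "tree_species_detector"
    categories = ("oak", "pine")

    def predict(self, image_path: str) -> dict[str, Any]:
        return {"image": image_path, "detections": []}


registry = ToolRegistry()
registry.register(TreeSpeciesDetector())
print(registry.explain("pine"))
print(registry.explain("mangrove"))
```

The structural check catches obviously incompatible uploads at registration time; it does not say anything about model quality, which still has to be validated separately.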

4.4 Implementing Open-Vocabulary and Custom Models: A Hybrid Approach

- Open-Vocabulary as a Default

  - Integrate open-vocabulary models like CLIP or BLIP by default. This would give the system a solid baseline to recognize objects and scenes beyond fixed categories.

  - These models could act as a first pass: if they confidently classify the query, the system can move on. If not, it could suggest using more specialized models.

- Custom Model Integration with a User-Friendly Interface

  - Develop a simple interface where users can upload and manage their own models.

  - Provide clear guidelines on how to integrate models (e.g., input/output formats) so users can seamlessly extend the system’s capabilities.

- Model Sharing and Collaboration

  - Create a repository or marketplace where users can share custom models. For example, a researcher working on urban development could publish a model for detecting parking lots, which others could reuse.

  - This approach ensures the system benefits from collective knowledge, continually expanding its abilities.
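The "first pass" idea above boils down to a confidence gate. The sketch below assumes the open-vocabulary model returns one similarity score per candidate label; the scores and the 0.6 threshold are made-up values, and no real CLIP or BLIP call is shown.

```python
import math


def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Turn raw similarity scores into probabilities that sum to 1."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {label: math.exp(value - top) for label, value in scores.items()}
    total = sum(exps.values())
    return {label: value / total for label, value in exps.items()}


def first_pass(scores: dict[str, float], threshold: float = 0.6) -> dict:
    """Accept the top label if it is confident enough, otherwise defer."""
    probs = softmax(scores)
    label, confidence = max(probs.items(), key=lambda item: item[1])
    if confidence >= threshold:
        return {"decision": "accept", "label": label, "confidence": confidence}
    # Below the threshold: hand over to a specialized model or ask the user.
    return {"decision": "defer", "label": None, "confidence": confidence}


clear_case = first_pass({"parking lot": 5.0, "runway": 1.0, "farmland": 0.5})
unclear_case = first_pass({"parking lot": 1.0, "runway": 0.9, "farmland": 0.8})
print(clear_case["decision"], clear_case["label"])
print(unclear_case["decision"])
```

The threshold is the design knob: set it high and the system defers more often to specialized or custom models, set it low and it answers more often but with a higher risk of confident mistakes.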

5. Future Opportunities in Remote Sensing Automation

5.1 The Future of Automated Remote Sensing

Here are some things I think will push this field forward:

- Customizable Models: Letting users upload their own models or fine-tune existing ones could dramatically expand the system’s capabilities.

- Open-Vocabulary Classification: Models that aren’t constrained by pre-defined categories could handle a wider variety of tasks.

- Energy-Efficient AI: Running these models locally on edge devices (like drones) or with low power could unlock real-time applications for disaster management and agriculture.

5.2 A Path Forward: Error Handling and Learning from Queries

To further improve, the system could learn from failed queries. If Remote Sensing ChatGPT frequently receives requests for tasks it can’t handle (e.g., identifying specific crop types), this feedback can guide future updates, such as integrating more advanced models or datasets.
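A very small version of this feedback loop is just a tally of unsupported requests. The class below is a hypothetical sketch; the task strings are invented, and a real system would also need to group differently worded requests that mean the same thing.

```python
from collections import Counter


class UnsupportedRequestLog:
    """Counts requests the system could not serve, to prioritize new models."""

    def __init__(self) -> None:
        self._counts: Counter[str] = Counter()

    def record(self, requested_task: str) -> None:
        # Normalize case and surrounding whitespace so trivial variants merge.
        self._counts[requested_task.strip().lower()] += 1

    def top_gaps(self, n: int = 3) -> list[tuple[str, int]]:
        return self._counts.most_common(n)


log = UnsupportedRequestLog()
for task in ["crop type: maize", "Crop type: maize", "aircraft hangars", "crop type: maize "]:
    log.record(task)

print(log.top_gaps(1))  # prints [('crop type: maize', 3)]
```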

5.3 What Are the Trade-Offs?

While open-vocabulary models and custom model integration offer many benefits, there are some challenges to consider:

- Performance Overhead: Open-vocabulary models can be computationally intensive, which may impact response time.

- User Errors: Allowing users to upload models introduces the risk of poorly trained or incompatible models affecting the quality of outputs.

- Maintenance: Managing a growing ecosystem of user-generated models may require additional infrastructure for version control and monitoring.

6. Automating Remote Sensing with Remote Sensing ChatGPT and its Challenges

Remote Sensing ChatGPT automates remote sensing tasks by interpreting user requests in natural language and automatically determining the appropriate models and steps to execute. This significant advancement eliminates the need for manual planning and expertise that were previously required.

Here’s how it works:

- User Input: Users pose questions or requests about remote sensing data in natural language, such as determining the number of airplanes on a runway from satellite imagery.

- Task Planning: Remote Sensing ChatGPT processes the user’s query and identifies the necessary models and steps to fulfill the request. For example, it might determine that segmentation of the runway, airplane detection, and counting are necessary for the airplane query.

- Model Execution: The system then automatically executes the identified models in the correct sequence to generate results.
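The planning and execution stages can be sketched as a list of tool names run in order over a shared state. Everything here is an assumption for illustration: the plan is hard-coded where an LLM would normally produce it, the tools are stubs, and `run_plan` is a hypothetical helper rather than the system's actual executor.

```python
from typing import Any, Callable

State = dict[str, Any]
Tool = Callable[[State], State]


def segment_runway(state: State) -> State:
    # Stub: pretend the runway spans image rows 40 to 60.
    return {"runway_rows": (40, 60)}


def detect_airplanes(state: State) -> State:
    # Stub: row coordinate of each detected airplane.
    return {"airplane_rows": [47, 50, 125]}


def count_on_runway(state: State) -> State:
    low, high = state["runway_rows"]
    return {"count": sum(low <= row <= high for row in state["airplane_rows"])}


TOOLS: dict[str, Tool] = {
    "segment_runway": segment_runway,
    "detect_airplanes": detect_airplanes,
    "count_on_runway": count_on_runway,
}


def run_plan(plan: list[str], tools: dict[str, Tool]) -> State:
    """Validate the plan against the known tools, then run each step in order."""
    unknown = [step for step in plan if step not in tools]
    if unknown:
        # Refuse up front instead of improvising an answer.
        raise ValueError(f"Plan uses unsupported tools: {unknown}")
    state: State = {}
    for step in plan:
        state.update(tools[step](state))
    return state


plan = ["segment_runway", "detect_airplanes", "count_on_runway"]
print(run_plan(plan, TOOLS)["count"])  # prints 2
```

Checking the plan before running it is what separates an honest "I can't do that" from a fabricated result when the planner names a tool that does not exist.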

This automation has the potential to:

- Reduce reliance on remote sensing specialists.

- Speed up analysis, which is crucial in time-sensitive situations like disaster response.

- Make remote sensing more accessible to a wider range of users, including researchers from other fields and smaller organizations without dedicated technical teams.

6.1 Challenges Faced by Remote Sensing ChatGPT

- Visual and Task Diversity: Remote sensing data differs significantly from standard images, often including vegetation indices, infrared information, and elevation models. Many tasks demand highly specialized models, which limits the system when it encounters unsupported requests.

- Model Selection: The team observed that gpt-3.5-turbo outperformed gpt-4 in task planning, emphasizing the importance of task-specific model optimization.

- Failure Cases: The system faces limitations in unsupported categories and sometimes overconfidently guesses answers, highlighting the need for better fallback mechanisms.

6.2 Proposed Improvements

- Fallback Mechanisms:

  - Clarify queries when ambiguous and disclose model limitations.

  - Suggest alternative approaches when a task fails or offer open-ended resolutions for better user interaction.

- Error Handling and User Interaction:

  - Prioritize transparency over guessing and provide alternative insights if original tasks fail.

- Open-Vocabulary and Custom Models:

  - Integrate open-vocabulary models (e.g., BLIP) as a baseline for diverse queries and allow custom model integration for domain-specific needs.

6.3 Implementation and Benefits

- Open-Vocabulary Models: These models handle diverse queries, reduce failure rates, and improve user experience by recognizing objects and scenes beyond predefined categories.

- Custom Model Integration: Supports scalability and user-specific needs, enabling continuous system growth and adaptability.

- Collaborative Model Sharing: A model repository fosters community contributions, expanding system capabilities.

6.4 Potential Trade-offs

- Performance Overhead: Open-vocabulary models may impact response times.

- User Errors: Custom models may introduce quality variations.

- Maintenance: An ecosystem of user-generated models requires infrastructure for version control.

Final Thoughts

Right now, complex analyses require expensive tools, specialized knowledge, or both. But what if anyone could just ask a question about satellite imagery and get useful insights back, quickly and without needing to be an expert?

That’s the future this system hints at. However, for it to truly reach that potential, we need to address its current limitations, especially around handling unsupported tasks and managing user expectations. The foundation is solid, but more work is needed to make it reliable for everyday use.

The key takeaway? Automating remote sensing is within reach, and the tools we develop today will shape how accessible and impactful earth observation becomes in the future. With smarter planning, customizable models, and better user interaction, we could soon have systems that not only answer questions but help solve real-world problems, from tracking deforestation to managing disaster recovery.